Given the pace at which innovation happens and technologies evolve today, software development is much like an F1 race. Precision is critical, but speed can’t be sacrificed either.

A lot more goes into F1 racing strategy than meets the eye. There is no denying the skill of the drivers who battle it out on the track, but it takes an entire team to plan and execute a winning strategy. Everything from designing the vehicle and its aerodynamics to deciding how much fuel to start with and on which lap to refuel or change tires is critical to success. Similarly, the success of the DevOps approach to software development and delivery rests on collaboration between stakeholders to accelerate the release of new software while ensuring quality and optimizing costs. Delays can translate into lost business opportunities and jeopardize your competitive advantage. The key is to focus on continuous integration, continuous testing, and continuous delivery to ensure quality, reliability, and speed.

The DevOps Pit Crew

Total race time in F1 includes the time spent at pit stops, so the coordination and agility of the pit crew play a very important role and can at times decide the fate of a world championship. The pit crew has just a few seconds to change tires, refuel, or make other adjustments to address any vehicle performance issues that could affect the outcome of the race. It’s no different for DevOps. The DevOps pit crew has three key constituents: Software Engineering, Quality Assurance (QA), and Operations. While each of these stakeholders has its own priorities and responsibilities, they ultimately need to work towards the shared objective of delivering the best-quality software faster. Any oversight can result in delays, or in bugs and defects slipping into production and being discovered by customers.

Let’s dig a level deeper to understand the role played by each constituent of the DevOps pit crew. Software engineers are tasked with shipping new features to the market at an accelerated pace. Before release, however, their code must be passed by the QA team, whose job is to find defects and vulnerabilities in the developers’ work. The objective of QA is to keep substandard code from reaching production. Kickbacks from QA, owing to bugs identified during testing, delay the release of new features. Moreover, the number of kickbacks from QA is a leading indicator of the quality of the code developed by the Engineering team. With these seemingly conflicting goals, how can Engineering and QA work in tandem? And where does the IT Operations team fit into the overall scheme of DevOps? The IT Operations team is responsible for keeping the system up and running at optimal performance levels. Regular tasks such as migrations, updates, and infrastructure maintenance may at times interrupt the delivery of an IT service to end users, and IT Operations is responsible for minimizing the end-user impact of such interruptions. Together, Engineering, QA, and Operations are responsible for DevOps success.

Winning the DevOps Race with Data-Driven Visibility

How can engineers ensure that they push better-quality code to QA and continually accelerate the delivery of new features? How do they know which of the delivered features are the most impactful from the customers’ standpoint? These insights can help them prioritize which features to pursue in upcoming releases. Similarly, how can QA teams identify defects or bugs at an early stage and continually improve release quality? And how can IT Operations teams improve system availability and reliability, ensuring seamless end-user experiences?

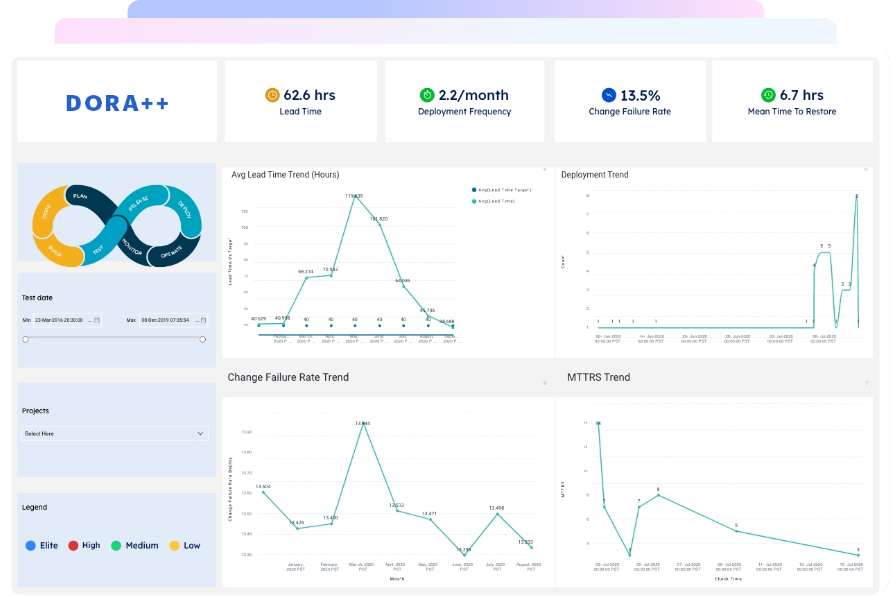

The F1 pit crew must be extremely vigilant so that not even a fraction of a second is wasted while servicing a car. In addition, a team of race engineers monitors the race from a control room and relays strategy in real time. Similarly, DevOps teams need real-time visibility into metrics such as Deployment Frequency, Speed of Deployment, and Lead Time for Changes to drive engineering efficiency. Two further measures are critical to software reliability: Mean Time to Resolution (MTTR), the time the DevOps pit crew takes to resolve issues, and Change Failure Rate, the proportion of changes or deployments that fail to achieve the desired outcome. Both need to be tracked continuously to ensure uninterrupted delivery of software to customers.
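To make these metrics concrete, here is a minimal Python sketch of how four of them could be computed from a list of deployment records. It is an illustration under stated assumptions, not a reference implementation: the `Deployment` record, its field names, and the sample data are all hypothetical, and in practice the timestamps would have to be pulled from your own version control, CI/CD, and incident management tools.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one deployment (field names are assumptions)."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a failure in production?
    resolved_at: Optional[datetime] = None  # when that failure was resolved


def deployment_frequency(deployments: List[Deployment], days: int) -> float:
    """Average number of deployments per day over a window of `days` days."""
    return len(deployments) / days


def lead_time_for_changes(deployments: List[Deployment]) -> timedelta:
    """Mean time from commit to production deployment."""
    total = sum((d.deployed_at - d.committed_at for d in deployments), timedelta())
    return total / len(deployments)


def change_failure_rate(deployments: List[Deployment]) -> float:
    """Proportion of deployments that failed."""
    return sum(d.failed for d in deployments) / len(deployments)


def mean_time_to_resolution(deployments: List[Deployment]) -> Optional[timedelta]:
    """Mean time from a failed deployment to its resolution (None if no data)."""
    resolved = [d for d in deployments if d.failed and d.resolved_at is not None]
    if not resolved:
        return None
    total = sum((d.resolved_at - d.deployed_at for d in resolved), timedelta())
    return total / len(resolved)


# Made-up sample data covering one week.
SAMPLE = [
    Deployment(datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 17)),
    Deployment(datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9),
               failed=True, resolved_at=datetime(2024, 1, 3, 11)),
    Deployment(datetime(2024, 1, 4, 9), datetime(2024, 1, 4, 13)),
    Deployment(datetime(2024, 1, 5, 9), datetime(2024, 1, 5, 21)),
]

if __name__ == "__main__":
    print("Deployments per day:", deployment_frequency(SAMPLE, days=7))
    print("Lead time for changes:", lead_time_for_changes(SAMPLE))
    print("Change failure rate:", change_failure_rate(SAMPLE))
    print("MTTR:", mean_time_to_resolution(SAMPLE))
```

The sketch measures resolution time from the moment of the failed deployment. A team that records when an incident was first detected might reasonably start the clock there instead, so treat the definitions above as one possible choice.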

The DevOps Research and Assessment Group (DORA), a part of Google Cloud, offers a comprehensive and easy-to-implement metrics framework that captures DevOps performance across two key parameters: speed and stability. It’s an approach that is already paying dividends for DevOps teams across enterprises. The proportion of the highest-rated performers, as per DORA’s ‘Accelerate State of DevOps 2019’ report, tripled from 2018 to 2019. While DORA provides a straightforward way to measure DevOps performance, implementing such a framework is often fraught with challenges. To explore how to get started with implementing the DORA metrics, address common pitfalls, unify data across a complex toolchain, drill through and across metrics, and scale performance tracking across teams, watch our on-demand webinar, ‘DevOps DORA Metrics: Implementation Demystified’.